A common question being considered by many people I’m talking to is “to what extent should we adopt public cloud services, and to what extent do we build ourselves?”. Cloud services exist on a spectrum from simple IaaS “provision of a virtualised instance” through Platform-as-a-Service offerings such as managed databases and API management platforms, all the way through to highly differentiated - and highly proprietary - Software-as-a-Service business applications.

Adopting cloud services, especially higher value services, can deliver massive value by cutting time-to-value of digital solutions, providing better, more robust services, and allowing businesses to stick to what they are good at. But at the same time, many IT functions are very nervous about cloud lock-in. Cloud services will be remarkably sticky, either through direct lock-in through use of proprietary functionality, APIs and tools, or more subtle dependencies - data has gravity and achieving escape velocity will be hard in many situations. Given the pay-as-you-go model under which most cloud services are consumed, if a cloud vendor drops out of growth mode, they are going to have a number of pricing knobs they can turn - should they wish - until their customers’ eyes are watering. Growth will not continue forever, and although exploiting customers in this way would be writing a suicide note for a cloud business in the longer term, stranger things have happened and it’s impossible to discount that a cloud vendor might suddenly have a need to boost short term profit at their customers’ expense.

Some are also concerned about the business continuity risk of a cloud vendor suddenly going out of business completely. I feel this is mostly a concern with small vendors. At least AWS and Azure are rapidly becoming such critical infrastructure that they are “too big to fail” - in reality, they would probably have to be backstopped at the government level to prevent economic chaos from anything less than a controlled wind-down.

I believe there is no one right answer to this trade-off that will suit all businesses and all systems. Most enterprises will need to adopt a mix of approaches for different situations and over different timescales; the key is to have a clear strategy and roadmap. It is also important to be pragmatic; a common request is for complete cloud independence, but unfortunately this is - at the moment, at any rate - essentially a utopia. It is possible to reduce lock-in, but there is generally a substantial cost to doing so - either in up-front investment, to stand up an equivalent cloud-neutral reusable service, or in lost delivery productivity and unrealised business value.

It may be very sensible to source from multiple cloud vendors, ensuring that commercial relationships are in place to enable new deployments to choose and to maintain leverage. However, individual applications and services, once deployed, are quite unlikely to move unless the need becomes acute. IT has always had to place bets on vendors, and there is no magic bullet that will change that in the cloud case.

An important point is that lock-in is not all or nothing; it is a spectrum. For example, to pick a few AWS services:

- Elastic Beanstalk represents a low lock-in: it can be passed standard deployment artefacts. Moving to a different PaaS will have some impact on continuous delivery pipeline configurations, and impact management processes, but little more.

- Lambda is similar to Elastic Beanstalk, as similar services exist on other clouds (albeit not at quite the same level of maturity, at time of writing).

- Amazon RDS can represent a medium lock-in, depending on the flavour of RDS selected - it provides high value at the operational level, and other clouds do not currently have equivalent managed database offerings. Aurora, while it is MySQL compatible at a protocol level, is a distinct proprietary fork and therefore not available outside Amazon: applications will at least require substantial functional and non-functional regression testing as part of porting to another cloud against MySQL or one of its variants. In other cases, moving from Amazon to another cloud would typically require writing management scripts equivalent to what RDS provides, testing failover/backup etc. - however, it is worth pointing out that all this is just deferring work that you’d have to do anyway if you chose to avoid RDS for lock-in reasons.

- Use of Infrastructure-as-a-Service offerings such as EC2, EBS, ELB etc. represents a medium lock-in, as automation of IaaS services can take substantial periods of time, and even though some tools such as Terraform can orchestrate multiple clouds, the scripts all end up being cloud dependent. While there have been attempts to build IaaS cloud abstraction layers, the problem is that the lowest common denominator that these abstraction layers target is generally too low to be useful.

- Services like Dynamo currently represent a high level of lock-in, with both a proprietary API and no production-class compatible non-AWS alternative. However, I predict that given its popularity, in future viable API-compatible alternatives will emerge. One of the first Amazon services, S3, originally was in the same position, but now you can easily stand up your own open source or proprietary S3 compatible object store. Depending on your application use cases, it may also be possible to introduce an application-level abstraction layer.

Of course, Software-as-a-Service propositions are just as proprietary as the packaged enterprise applications they replace, with the additional rider that you can’t carry on using them (even in unsupported mode) if the vendor relationship is terminated for any reason. For this reason, having business continuity plans laid down in advance - typically a back-up of key data in a form that could be easily migrated into an alternative service or package, combined with a viable way of end-users accessing it in the meantime - is critical.

One temptation is to try to own the problem internally in its entirety by building a private (or perhaps a hybrid) cloud. However, my experience suggests that for most organisations, this isn’t a good idea. Unless the enterprise is a very technology-focused organisation already used to operating infrastructure in an automated way - a major telco, for example - it can be extremely hard to attract and retain the right staff to make this work and to reach the scale required to make it affordable. Any scaled cloud is one of the most complex and critical distributed systems around, building and running it is not a simple proposition, and you will be competing with the major clouds and exciting start-ups for the best infrastructure automation engineers. Even though pre-integrated, appliance-style cloud hardware is making delivery of private clouds easier and cheaper (relatively) from a technical perspective, they still require effective and ongoing investment and capacity management. Leading clouds operate on a massive scale and are in a position to squeeze out efficiencies on a level unimaginable to even the largest “typical” enterprise. I have seen many examples of failed private cloud projects, and few successes. On the other hand, I’ve regularly seen very successful adoptions of public cloud.

More viable is to use a cloud-independent Platform-as-a-Service such as Cloud Foundry, OpenShift or one of the many smaller players in the space such as Deis, Tsuru, Convox, Otto etc. to provide a platform-level abstraction over multiple clouds. Docker container clusters such as Kubernetes provide a similar abstraction at the container level. Choosing one isn’t easy as it’s a fast moving space - GDS have helpfully published their selection criteria and results which may be a starter for ten. There is still substantial overhead in setting these up and operating them, and it moves away from the ‘single pane of glass’/‘single throat to choke’ model that using equivalent cloud-specific services provides.

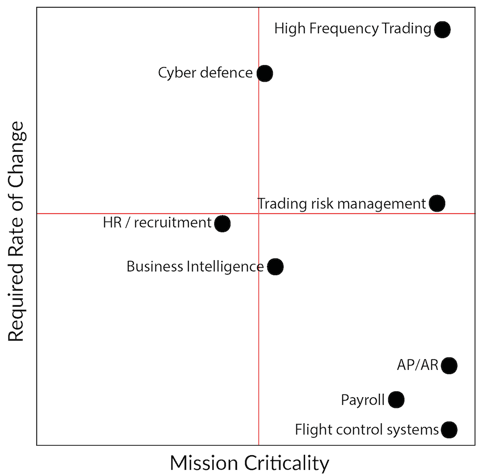

The final piece of the puzzle is that not all applications, products and services are equivalent. Gartner has their concept of bimodal IT, but I think this is a little simplistic - rate of change and mission criticality are distinct dimensions that bimodal folds into one. Here’s a diagram I drew for the BJSS Enterprise Agile book:

Most of the bottom half of the diagram is likely to migrate to SaaS packages over time. The far bottom-right may not, due to the business continuity concerns noted above. The top-left quadrant includes a large subsection of “digital” apps. If your application needs to be highly reactive to market demands but does not have a long life span or is not mission critical, then aggressively leveraging high value cloud services makes sense.

The hardest quadrant is the top-right. Make sure you know what applications fall into this category, consider the trade-offs carefully, and make sure everyone understands them and that they are properly recorded.

Share this post

Twitter

Google+

Facebook

Reddit

LinkedIn

StumbleUpon

Pinterest

Email